What Is Real-Time Data Processing & Why It’s Reshaping Business Operations

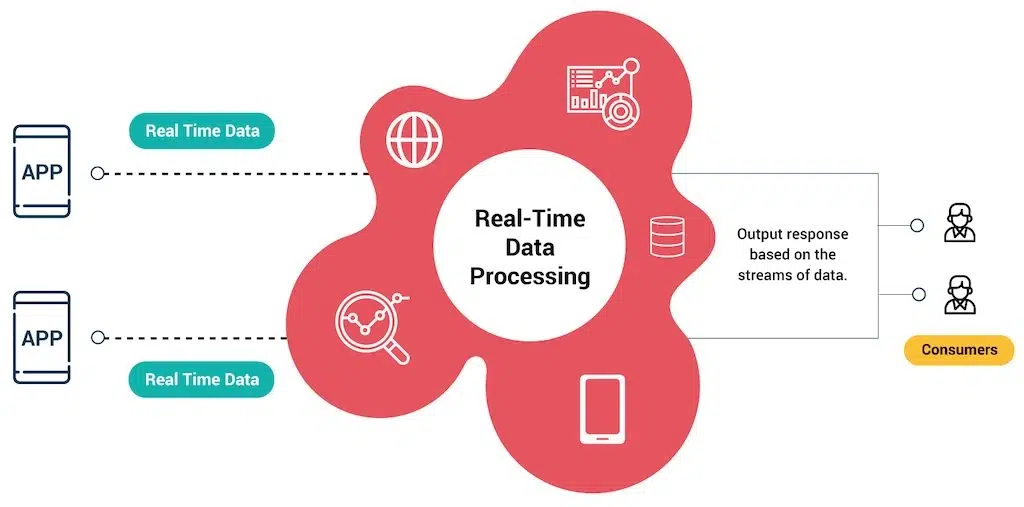

Real-time data processing is redefining how enterprises operate and compete in today’s fast-moving digital world. This approach allows businesses to collect and analyze live data streams within seconds, enabling immediate responses to changing conditions. By integrating digital transformation strategies with real-time capabilities, organizations can accelerate decision-making, personalize customer experiences, and boost operational efficiency.

This article explores what real-time data processing is, why it matters, and how adopting it can reshape business operations for long-term growth and sustainable competitive advantage.

1. What Is Real-Time Data Processing

Real-time data processing is the capability to capture, handle, and analyze information the moment it is created, so that results are available for use almost instantly. Because captured data feeds straight into decisions, collecting the right data matters even more in real-time environments, where it directly shapes business outcomes. This empowers organizations to make quick, informed decisions using the most current information available.

Businesses often adopt real-time systems when immediate data access is essential, such as in financial trading, e-commerce platforms, or online gaming. Real-time processing is related to, but distinct from, stream processing. Stream processing focuses on continuously handling data streams as they arrive, while real-time processing is defined by how quickly a system must respond to its input. Most stream processing platforms operate in real time, though not all real-time systems are stream-based.

Based on timing constraints, real-time processing includes three categories:

- Hard real-time systems must meet every deadline without failure, often used in aviation or healthcare where delays cause critical harm.

- Soft real-time systems aim to meet deadlines but can occasionally miss them without serious impact, such as in video streaming or gaming.

- Firm real-time systems can tolerate occasional missed deadlines, but a result that arrives late is discarded as invalid rather than treated as a system error.
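The difference between these categories comes down to what a system does with a result that misses its deadline. The Python sketch below models that decision; the `Policy` enum, `handle_result` function, and `DeadlineMissed` exception are hypothetical names for illustration, and the code uses `FIRM` for the third category, the usual textbook term for systems that discard late results. A genuine hard real-time system enforces deadlines through its scheduler and hardware design, not through an after-the-fact check like this one.

```python
from enum import Enum


class Policy(Enum):
    HARD = "hard"  # a missed deadline counts as a system failure
    SOFT = "soft"  # a late result is still used, at reduced quality
    FIRM = "firm"  # a late result is discarded as worthless


class DeadlineMissed(Exception):
    """Raised when a hard real-time deadline is violated."""


def handle_result(policy, value, latency_ms, deadline_ms):
    """Decide what to do with a result based on when it arrived.

    Returns (value, on_time). A late FIRM result comes back as (None, False);
    a late HARD result raises DeadlineMissed.
    """
    if latency_ms <= deadline_ms:
        return value, True
    if policy is Policy.HARD:
        raise DeadlineMissed(f"took {latency_ms} ms, deadline was {deadline_ms} ms")
    if policy is Policy.SOFT:
        return value, False  # keep the late value; the caller may log degraded service
    return None, False  # FIRM: drop the late value
```

For example, `handle_result(Policy.FIRM, 42, latency_ms=12, deadline_ms=10)` returns `(None, False)` because the late value is dropped, while the same call with `Policy.SOFT` returns `(42, False)`.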

2. Real-Time vs. Batch Data Processing

Organizations often choose between real-time data processing and batch processing when designing their data architecture. These two approaches handle information in very different ways.

Understanding their differences helps enterprises select the most suitable method for their operational and strategic goals. While batch processing groups data for scheduled analysis, real-time systems handle information instantly as it arrives, enabling immediate decision-making.

| Aspect | Real-Time Data Processing | Batch Data Processing |
| --- | --- | --- |
| Processing Method | Handles each data item the moment it is generated | Collects large data sets and processes them at scheduled intervals |
| Latency | Extremely low latency with instant availability | Higher latency due to waiting for batch completion |
| Decision-Making Speed | Enables immediate responses based on live data | Delayed decisions as insights appear after batch cycles |
| Use Cases | Financial trading, e-commerce personalization, IoT monitoring, fraud detection | Payroll processing, billing cycles, monthly reporting, data archiving |
| Infrastructure Needs | Requires continuous computing resources and high system availability | Requires less continuous computing but high capacity during scheduled jobs |
| Complexity | More complex to build, scale, and maintain | Simpler architecture and easier to manage |
| Cost Considerations | Often more expensive due to 24/7 resource allocation | Usually more cost-efficient for large but infrequent workloads |
| Data Freshness | Provides constantly updated data for instant insights | Provides snapshots of past data after scheduled processing |
| Error Handling | Errors must be handled instantly to avoid data loss | Errors can be corrected during scheduled reprocessing |
| Typical Technologies | Event streaming platforms, in-memory computing, microservices | ETL pipelines, data warehouses, batch schedulers |
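The core difference in this comparison, when results become available, can be shown in a few lines of Python. This is a toy sketch with hypothetical function names and in-memory events; a production system would read from a message broker or a data warehouse rather than a list.

```python
def process_batch(events):
    """Batch style: wait for the complete set, then compute once."""
    return sum(e["amount"] for e in events)


def process_stream(events):
    """Real-time style: update the result as each event arrives."""
    total = 0
    for event in events:
        total += event["amount"]
        yield total  # a current answer is available after every event


events = [{"amount": 10}, {"amount": 25}, {"amount": 5}]
print(process_batch(events))         # 40, available only once all events are in
print(list(process_stream(events)))  # [10, 35, 40], one up-to-date result per event
```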

3. Key Benefits of Real-Time Data Processing

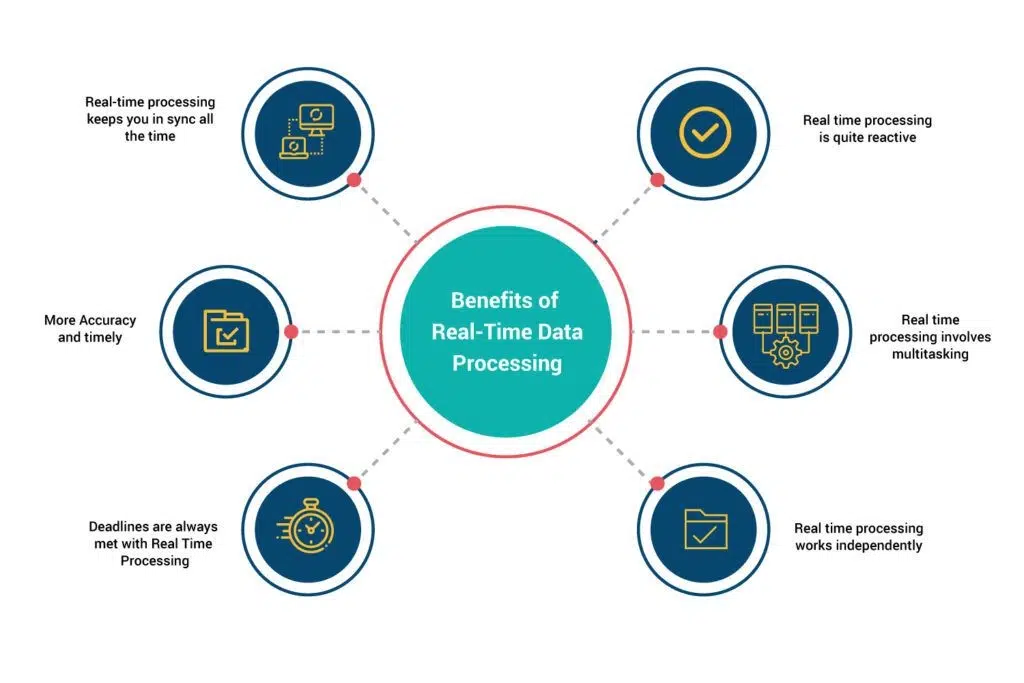

This section explores 6 key benefits of real-time data processing. Each plays a crucial role in helping businesses operate faster, smarter, and more efficiently in competitive markets.

3.1. More Accurate and Timely Insights

Real-time data processing removes the lag that leaves batch-processed data stale by the time it is used. Access to fresh information allows decisions based on current conditions, not outdated reports.

According to a survey by InterSystems, over 75% of organizations said untimely data has caused them to miss business opportunities. This shows how critical timely insights are for enterprise growth.

Financial services use real-time analytics to detect fraud within milliseconds, preventing losses, which reflects the broader data analytics trend toward immediate insights and automated decision-making. Healthcare teams monitor live patient vitals to respond immediately to health risks. Manufacturers use instant production data to stop defective batches before they reach customers.

By relying on real-time accuracy, businesses reduce risks, improve forecasting, and build trust in data-driven strategies. Real-time systems turn data into a dependable decision-making asset rather than a delayed reference.

3.2. Keeping Operations in Continuous Sync

Real-time data processing keeps data synchronized across every department, removing the silos that often slow down collaboration. Sales teams can see current inventory while processing orders. Logistics managers can reroute deliveries using live location data. Support teams can access real-time customer behavior to resolve issues faster.

Executives benefit from unified dashboards that combine data from multiple units, allowing decisions based on actual conditions rather than outdated snapshots. This synchronization improves agility, reduces miscommunication, and keeps organizations aligned toward shared goals.

3.3. Highly Reactive Decision-Making

Real-time data processing allows organizations to react immediately to new events, customer behaviors, or market shifts. This reactivity enables companies to seize opportunities and resolve issues before they escalate. Batch systems often fall short in fast-paced environments because they provide insights only after long processing cycles.

Being reactive also reduces operational and reputational risk. Companies can correct errors before they affect customers or disrupt operations. This responsiveness enhances trust with stakeholders and improves customer satisfaction. By enabling instant action, real-time systems turn raw data into immediate business value, helping organizations stay ahead in dynamic markets.

3.4. Supporting Multitasking Operations

Real-time data processing supports multitasking by allowing systems to handle multiple data streams and tasks simultaneously. This capability is crucial for modern enterprises managing complex operations across global networks. Unlike batch systems that run the stages of data processing sequentially, real-time platforms can analyze many data flows at once, so a slow or busy stream does not hold up the others.

This multitasking ability improves productivity and operational continuity. Teams no longer wait for one process to finish before starting another. It also ensures consistent service quality even during peak workloads. By enabling concurrent processing, real-time systems allow enterprises to operate at scale, maintain responsiveness, and serve customers seamlessly across channels.
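One common way to handle several streams at once inside a single service is asynchronous I/O. The sketch below uses Python's standard `asyncio` module with simulated sources; the stream names, delays, and the doubling transformation are placeholders. Note that `asyncio` interleaves I/O-bound work on one thread rather than running CPU-heavy work in parallel; for that, teams typically use multiple processes or a distributed stream engine.

```python
import asyncio


async def sensor_stream(name, readings, delay_s):
    """Simulated source: yields readings with a delay that stands in for network I/O."""
    for value in readings:
        await asyncio.sleep(delay_s)
        yield name, value


async def consume(stream, results):
    """Process one stream; each await lets the other streams make progress."""
    async for name, value in stream:
        results.setdefault(name, []).append(value * 2)  # placeholder transformation


async def main():
    results = {}
    # All three streams are consumed concurrently on a single event loop.
    await asyncio.gather(
        consume(sensor_stream("orders", [1, 2, 3], 0.01), results),
        consume(sensor_stream("payments", [10, 20], 0.02), results),
        consume(sensor_stream("clicks", [5], 0.005), results),
    )
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because each `consume` call awaits between readings, the slower `payments` source does not block the faster ones.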

3.5. Meeting Critical Deadlines Reliably

Real-time data processing helps organizations meet strict deadlines by ensuring data is processed and delivered instantly. In industries where time sensitivity is crucial, missing a deadline can create costly disruptions or compliance issues. Real-time systems eliminate waiting times that often slow down traditional batch processing.

Meeting deadlines builds customer trust and operational reliability. Teams can coordinate confidently because they know data will arrive within agreed time limits. This reliability also supports automation, allowing systems to execute time-bound tasks without human intervention. By delivering data on time, real-time systems improve service quality, compliance, and customer satisfaction simultaneously.

3.6. Operating Independently Across Systems

Real-time data processing operates independently, meaning systems continue processing data without waiting for large batches or manual triggers. This independence creates uninterrupted data flows, even when network conditions fluctuate or workloads spike. It also allows different system components to function without depending on a central scheduler.

This autonomy improves system resilience and scalability. Independent processing prevents single points of failure from halting operations and allows faster recovery during incidents. It also simplifies infrastructure, enabling teams to scale components individually based on demand. By working independently, real-time systems keep enterprise operations running smoothly and continuously, even under changing conditions or heavy workloads.


4. Common Use Cases Across Industries

Real-time data processing is transforming business operations across diverse sectors. The following sections explore how finance, healthcare, retail, and logistics industries leverage real-time systems to reduce risk, improve customer experience, and drive operational efficiency on a global scale.

4.1. Real-Time Data Processing In Finance

Real-time data processing enables banks to detect fraudulent transactions within milliseconds by analyzing transaction data the instant it arrives, which helps stop losses before they occur. In algorithmic trading, real-time systems execute orders at high speed based on live market conditions, helping traders capitalize on small price movements ahead of competitors.
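A simple building block behind fast fraud screening is a velocity rule: flag a card that is used too many times within a short window. The sketch below is a hypothetical, rule-based illustration in Python; the `VelocityCheck` class and its thresholds are assumptions, it expects each card's events to arrive in timestamp order, and real fraud systems typically combine many such signals with statistical or machine-learning models.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card that makes too many transactions within a sliding time window."""

    def __init__(self, max_txns, window_s):
        self.max_txns = max_txns
        self.window_s = window_s
        self._recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def on_transaction(self, card_id, ts):
        """Process one transaction as it arrives; return True if it looks suspicious."""
        window = self._recent[card_id]
        window.append(ts)
        # Evict timestamps that have slid out of the window.
        while window and ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_txns
```

With `max_txns=3` and `window_s=60`, a fourth transaction on the same card within 60 seconds is flagged, while a transaction well after the burst is not.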

4.2. Real-Time Data Processing In Healthcare

Hospitals use real-time data processing to monitor patient vitals from connected devices continuously. When anomalies appear, systems send instant alerts to clinicians. This early detection allows timely interventions, reducing complications and readmissions. Alongside other technology innovations in healthcare, real-time systems improve patient safety, operational efficiency, and quality of care in critical care environments.
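The alerting step can be sketched as a per-patient monitor that fires only when a reading stays out of range for several samples in a row, which reduces false alarms from a single noisy value. This is a hypothetical Python illustration; the `VitalsMonitor` class, the range, and the `consecutive` setting are placeholders, not clinical thresholds.

```python
from dataclasses import dataclass, field


@dataclass
class VitalsMonitor:
    """Alerts when a vital sign stays outside [low, high] for several readings in a row.

    The range and `consecutive` values are illustrative placeholders,
    not clinical guidance.
    """
    low: float
    high: float
    consecutive: int = 3
    _out_of_range: int = field(default=0, init=False, repr=False)

    def on_reading(self, value):
        """Process one streamed reading; return True when an alert should fire."""
        if self.low <= value <= self.high:
            self._out_of_range = 0  # back in range: reset the counter
            return False
        self._out_of_range += 1
        # Fire once, on the reading that reaches the threshold, not on every later one.
        return self._out_of_range == self.consecutive
```

For instance, a monitor with `low=50` and `high=120` fed `[80, 130, 135, 140, 145, 90]` alerts only on the fourth reading (140), the third consecutive out-of-range value.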

4.3. Real-Time Data Processing In Retail

Real-time data processing helps retailers adjust prices instantly based on demand, competitor activity, and market trends. It also tracks inventory levels across stores in real time, preventing stockouts and overstocking. By aligning supply with live demand, retailers boost revenue, cut costs, and enhance customer experiences through timely product availability.
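Live inventory tracking comes down to applying each sale or restock event as it happens and reacting when stock crosses a threshold. The Python sketch below assumes a hypothetical event format of `(kind, sku, qty)` and a single reorder point for all SKUs; a real system would persist stock levels and handle concurrent updates.

```python
class InventoryTracker:
    """Keeps live stock levels from sale/restock events and flags low stock."""

    def __init__(self, initial_stock, reorder_point):
        self.stock = dict(initial_stock)
        self.reorder_point = reorder_point

    def apply(self, kind, sku, qty):
        """Apply one event immediately; return True if the SKU just fell to or below the reorder point."""
        before = self.stock.get(sku, 0)
        if kind == "sale":
            after = before - qty
        elif kind == "restock":
            after = before + qty
        else:
            raise ValueError(f"unknown event kind: {kind!r}")
        self.stock[sku] = after
        # Alert only on the downward crossing, so one low-stock episode yields one alert.
        return before > self.reorder_point >= after
```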

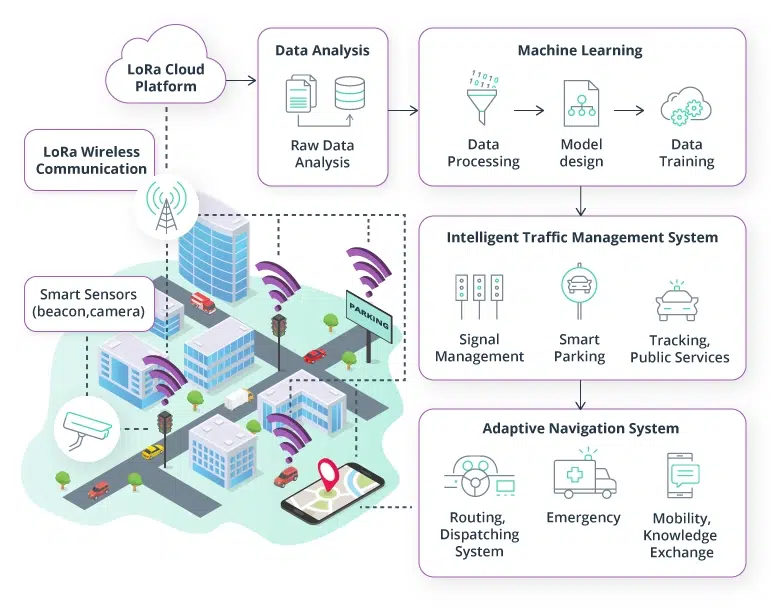

4.4. Real-Time Data Processing In Transportation

Transport networks use real-time data processing to control traffic signals dynamically, easing congestion and improving safety. Fleet operators track vehicles live to optimize routes and fuel usage. Real-time systems adapt to changing conditions instantly, cutting travel times, reducing emissions, and boosting the efficiency of both passenger and freight operations.

5. Challenges Enterprises Face When Adopting Real-Time Systems

Adopting real-time data processing promises speed and agility, but many enterprises face significant challenges. Building real-time systems requires complex infrastructure, specialized skills, and strict governance. The table below outlines the most common obstacles organizations encounter when implementing real-time systems, helping decision-makers prepare strategies to overcome them effectively.

| Challenge | Description | Impact on Enterprises |
| --- | --- | --- |
| High Infrastructure Costs | Real-time systems demand powerful servers, storage, and continuous computing resources. | Increases upfront investment and ongoing operational expenses significantly. |
| Data Integration Complexity | Combining diverse real-time data streams from multiple sources is technically difficult, especially when transitioning from legacy systems; a thorough database migration checklist helps keep data flowing during system upgrades or platform changes. | Delays deployment and increases the risk of inconsistent or incomplete data. |
| Scalability and Performance Limits | Handling massive real-time data volumes at low latency requires advanced architecture. | Performance bottlenecks can degrade user experience and limit business growth. |
| Skill Gaps in Workforce | Real-time systems need specialized engineering and data architecture expertise. | Lack of skills slows development and increases the risk of project failure. |
| Security and Compliance Risks | Real-time pipelines continuously move sensitive data across networks, requiring robust encryption to protect information as it flows through each processing stage. | Raises exposure to cyberattacks and penalties for regulatory non-compliance. |
| Maintenance and Monitoring Effort | Systems require constant monitoring to prevent downtime or data loss. | Increases operational workload and long-term management costs. |

6. How to Successfully Implement Real-Time Data Processing

Deploying real-time data processing requires careful planning, robust technology, and skilled teams. Success depends on strong architecture, the right tools, and ongoing monitoring. The following steps outline how enterprises can build reliable real-time systems that support business growth, reduce risks, and deliver continuous insights across all operations.

6.1. Building a Scalable Data Architecture

A strong architecture forms the backbone of reliable real-time data processing. It must handle a constant flow of high-speed data without failures. A scalable architecture lets real-time systems grow while preserving reliability and performance as data volumes increase.

- Use distributed and event-driven designs: Microservices and event streaming support parallel processing and horizontal scaling.

- Deploy high-throughput data pipelines: Tools like Apache Kafka or Amazon Kinesis maintain stable data flow under heavy loads.

- Adopt in-memory computing: Platforms like Redis or Hazelcast reduce latency by avoiding slow disk access.

- Leverage cloud-native infrastructure: Dynamic resource scaling ensures the system adapts to fluctuating workloads.

- Design for fault tolerance: Replication and automatic failover prevent outages and minimize data loss.
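Log-based platforms such as Apache Kafka let a consumer resume from its last committed offset after a failure, which means some events can be delivered again. A common complement to replication and failover is therefore to make processing idempotent. The sketch below models that in plain Python rather than with a Kafka client; the `IdempotentConsumer` class, the list-based log, and the event fields are hypothetical, and it assumes the derived state and the set of processed IDs are stored durably together, so they survive a crash that the offset commit does not.

```python
class IdempotentConsumer:
    """Reads an append-only event log from its committed offset and skips duplicates.

    Assumes `balance` and `seen_ids` are persisted together (for example in one
    database transaction), so they survive a crash even if the offset commit does not.
    """

    def __init__(self):
        self.committed_offset = 0  # where to resume reading after a restart
        self.seen_ids = set()      # IDs of events already applied
        self.balance = 0           # example state derived from the events

    def poll(self, log, max_events, crash_before_commit=False):
        """Process up to max_events starting at the committed offset."""
        start = self.committed_offset
        batch = log[start:start + max_events]
        for event in batch:
            if event["id"] in self.seen_ids:
                continue  # redelivered event: already applied, so skip it
            self.balance += event["amount"]
            self.seen_ids.add(event["id"])
        if crash_before_commit:
            return  # simulated crash: the offset never gets committed
        self.committed_offset = start + len(batch)
```

Calling `poll(log, 2, crash_before_commit=True)` and then `poll(log, 3)` re-reads the first two events after the simulated crash, skips them, and applies only the third, so each event is counted once.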

6.2. Choosing the Right Tech Stack and Development Partner

Selecting the right technologies and partner accelerates successful real-time data processing implementation. Wrong choices can delay progress and raise costs.

- Evaluate technology performance: Check for low latency, high availability, and seamless integration with existing systems.

- Use proven frameworks: Apache Flink, Spark Streaming, and Kafka provide mature, widely used stream handling.

- Prioritize security features: Encryption, access control, and compliance support must be built in from the start.

- Leverage cloud managed services: AWS, Azure, and GCP simplify setup and reduce operational overhead.

- Collaborate with expert developers: Skilled partners bring industry experience, best practices, and faster implementation timelines. Many enterprises work with specialized big data consulting firms to navigate the complexities of real-time system architecture and ensure optimal performance from the start.

6.3. Ensuring Robust Monitoring and Maintenance

Continuous monitoring keeps real-time data processing systems healthy and reliable over time. Without it, issues can disrupt operations unexpectedly.

- Implement observability tools: Track throughput, latency, and error rates in real time.

- Set proactive alerts: Notify teams instantly when anomalies or system failures occur.

- Enable auto-scaling: Adjust resources automatically to handle traffic spikes smoothly.

- Maintain audit logs: Capture all activities for faster troubleshooting and regulatory compliance.

- Conduct regular stress tests: Detect performance bottlenecks before they impact users.

- Assign a dedicated support team: Provide 24/7 operational oversight and incident response.
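The first two practices, tracking latency and error rates and alerting on them, can be sketched as a small sliding-window monitor. This Python example is an illustration under assumptions: the `LatencyMonitor` class, the window size, the latency budget, and the 1% error-rate threshold are hypothetical, and in production these metrics usually come from an observability stack rather than hand-written code.

```python
import math
from collections import deque


class LatencyMonitor:
    """Tracks recent latencies and failures and flags when either exceeds a limit."""

    def __init__(self, budget_ms, window=1000):
        self.budget_ms = budget_ms
        # (latency_ms, ok) pairs; the oldest drop off once the window is full.
        self.samples = deque(maxlen=window)

    def record(self, latency_ms, ok=True):
        """Record one processed event's latency and whether it succeeded."""
        self.samples.append((latency_ms, ok))

    def percentile(self, p):
        """Nearest-rank percentile of latency over the current window."""
        if not self.samples:
            return None
        ordered = sorted(latency for latency, _ in self.samples)
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    def error_rate(self):
        """Fraction of events in the window that failed."""
        if not self.samples:
            return 0.0
        return sum(1 for _, ok in self.samples if not ok) / len(self.samples)

    def should_alert(self, max_error_rate=0.01):
        """Alert when p99 latency is over budget or the error rate is too high."""
        p99 = self.percentile(99)
        too_slow = p99 is not None and p99 > self.budget_ms
        return too_slow or self.error_rate() > max_error_rate
```

Keeping latency and success flags in the same bounded window means both metrics describe the same recent traffic, so an old incident stops triggering alerts once it ages out.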

At Savvycom, we specialize in building custom software development solutions that support advanced stream processing and scalable real-time architectures. Our experienced developers combine domain expertise with cloud development to deliver robust, secure, and high-performance platforms. As your trusted partner, we help enterprises unlock the full potential of real-time systems, from planning and development to long-term maintenance.

Savvycom is here when you need us. Contact us now for further consultation:

- Phone: +84 24 3202 9222

- Hotline: +1 408 663 8600 (US); +612 8006 1349 (AUS); +84 32 675 2886 (VN)

- Email: [email protected]

What is an example of real-time data?

Common examples include credit card fraud detection during transactions, live stock trading prices, GPS navigation traffic updates, social media streaming feeds, and IoT sensor data from manufacturing equipment or smart devices.

Is OLAP real-time?

Traditional OLAP (Online Analytical Processing) is not real-time as it typically processes pre-aggregated historical data. However, modern real-time OLAP systems can provide near real-time analytics by continuously updating data cubes and using in-memory processing.
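The idea of continuously updated aggregates can be illustrated with a toy rollup that folds each event into pre-computed totals as it arrives, so queries never wait for a batch job. This Python sketch does not reflect how any particular OLAP engine is implemented; the `RollingCube` class and the region/product dimensions are hypothetical.

```python
from collections import defaultdict


class RollingCube:
    """Keeps pre-aggregated totals per (region, product) that update on every event."""

    def __init__(self):
        self.cells = defaultdict(float)  # (region, product) -> running revenue

    def ingest(self, region, product, revenue):
        """Fold one event into the aggregates as soon as it arrives."""
        self.cells[(region, product)] += revenue

    def total_by_region(self, region):
        """Roll up across products; answers reflect every event ingested so far."""
        return sum(v for (r, _), v in self.cells.items() if r == region)
```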

Who uses real-time data?

Financial institutions for fraud detection, e-commerce platforms for personalized recommendations, healthcare providers for patient monitoring, transportation companies for route optimization, manufacturers for quality control, and logistics firms for supply chain management.

What is the difference between online and real-time data processing?

Online processing refers to interactive systems where users can access and query data immediately, but processing may still have delays. Real-time processing specifically requires data to be processed and results delivered within strict time constraints, typically milliseconds to seconds.